I was in the ISP and hosting industries from roughly 1996 to 2014, and I lived through the transition of mail servers from something everyone ran themselves as a matter of course to a nightmare of spam, block lists, and a favorite entry point for threat actors. Any rational person or company now outsources e-mail to entities with far more resources to dedicate to the challenges, so I’ve long scoffed at people who want to run mail servers at home.

Your ISP, the providers of mail services large and not-so-large, and the purveyors of block lists and anti-spam/threat gateway services all want to make this very difficult for you. Deliberately.

People find a way, often using the free tier of some bulk mail sending service to route their outbound mail, or perhaps getting lucky DIY-ing it with a VPS provider who has managed to keep their IPs off the naughty lists.

I prefer not to be a freeloading, non-paying customer of anyone, as “free” services and “free tiers” tend to become more restrictive or disappear over time. I also know it’s foolish to believe that any VPS provider allowing SMTP traffic won’t eventually have a block list go nuclear on its entire IP space. So I’ve long chosen to pay Microsoft for its lowest-cost o365 account to have e-mail for my own domains, and I think the $6/m or whatever is a fair price to pay.

But I’ve had this theory that one could use Microsoft’s Exchange Online Protection (EOP) Plan, which costs just $1/m* per user, to cheaply avoid many of the pitfalls of self-hosting e-mail servers. Today I put it to the test with a domain I’d registered last week, partly to prove the point and partly for the opportunity to mess around with Synology Mail Plus.

It absolutely worked. Here are the steps I followed:

- Installed Synology Mail Plus.

- Configured for my domain.

- Activated a single user.

- Configured SMTP Smart Host pointing to my VPS over a VPN tunnel.

- Configured port forwarding and firewall rules to only allow TCP/25 connections to the Synology from o365 IP ranges.

- Clicked ‘Buy Now’ at Exchange Online Protection Plan.

- Created a new o365 tenant account.

- Handed over my credit card info for the $1/m charges.

- Added my domain to o365 tenant.

- admin.microsoft.com -> Settings -> Domains.

- Let Microsoft make the changes to my Cloudflare DNS.

- Adjusted the automatically created SPF DNS record to include my VPS IP, along with my static IP for completeness.

- Changed the Domain Type to InternalRelay.

- admin.exchange.microsoft.com -> Mail Flow -> Accepted Domains.

- Created Connectors for Inbound and Outbound e-mail.

- admin.exchange.microsoft.com -> Mail Flow -> Connectors.

- For Inbound I have a static IP and port 25 isn’t blocked, so I configured that to connect directly to my mail server.

- For Outbound I am blocked on port 25, and for the purposes of a Connector there are no alternate port options, so I proxy through the VPS using nginx.

- Outbound requires either having a static IP or using a certificate for auth. I went with IP auth.

- admin.exchange.microsoft.com -> Mail Flow -> Connectors.

- Enabled DKIM.

- security.microsoft.com -> Policies & rules -> Threat policies -> Email authentication settings -> DKIM

- Added DKIM DNS records.

- Added DMARC DNS record.
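For the SPF and DMARC steps above, here is a minimal Python sketch of what the resulting TXT record values might look like. It assumes the automatically created SPF record is Microsoft's usual `include:spf.protection.outlook.com` form; the IP addresses, the `rua` mailbox, and the helper names `build_spf` and `build_dmarc` are placeholders for illustration, not the values or tooling actually used here.

```python
import ipaddress

# Microsoft's documented SPF include for Exchange Online / EOP tenants.
EOP_SPF_INCLUDE = "spf.protection.outlook.com"


def build_spf(extra_ips, include=EOP_SPF_INCLUDE, policy="-all"):
    """Return an SPF TXT value that authorizes EOP plus extra sending IPs."""
    mechanisms = [f"include:{include}"]
    for raw in extra_ips:
        ip = ipaddress.ip_address(raw)  # raises ValueError on a typo
        tag = "ip4" if ip.version == 4 else "ip6"
        mechanisms.append(f"{tag}:{ip}")
    return " ".join(["v=spf1", *mechanisms, policy])


def build_dmarc(policy="none", rua=None):
    """Return a DMARC TXT value for the _dmarc.<domain> record."""
    tags = ["v=DMARC1", f"p={policy}"]
    if rua:
        tags.append(f"rua=mailto:{rua}")
    return "; ".join(tags)


if __name__ == "__main__":
    # Placeholder addresses from the RFC 5737 documentation ranges,
    # standing in for the VPS IP and the home static IP.
    print(build_spf(["203.0.113.10", "198.51.100.25"]))
    print(build_dmarc(policy="quarantine", rua="dmarc@example.com"))
```

Starting DMARC at `p=none` and tightening later is a common approach, but the right policy depends on how confident you are that every legitimate sender is covered by SPF or DKIM.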

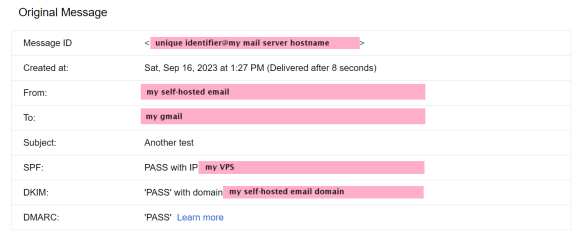

Microsoft could do a much better job of unifying all these different administration portals, but overall it wasn’t that bad. I tested by sending an e-mail to my Gmail account and it was successfully delivered to my Inbox, having passed SPF, DKIM, and DMARC:

And, mind you, this was a first message from a domain on a less-common .TLD, registered days ago with no prior reputation.
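If you would rather not eyeball raw headers, a check like this can be scripted. The sketch below uses only the Python standard library to pull the SPF, DKIM, and DMARC verdicts out of a saved message's `Authentication-Results` header. The sample message is made up for illustration (it is not the actual test message), and the regex is deliberately naive rather than a full RFC 8601 parser.

```python
import re
from email import message_from_string

CHECKS = ("spf", "dkim", "dmarc")


def auth_results(raw_message):
    """Map spf/dkim/dmarc to the first verdict found for each."""
    msg = message_from_string(raw_message)
    verdicts = {}
    for header in msg.get_all("Authentication-Results", []):
        # Unfold the header so verdicts on continuation lines are found.
        flat = " ".join(header.split())
        for check in CHECKS:
            match = re.search(rf"\b{check}=(\w+)", flat)
            if match and check not in verdicts:
                verdicts[check] = match.group(1).lower()
    return verdicts


def all_passed(raw_message):
    """True only if SPF, DKIM, and DMARC all report 'pass'."""
    verdicts = auth_results(raw_message)
    return all(verdicts.get(check) == "pass" for check in CHECKS)


# Hypothetical message, shaped like what Gmail's "Show original" displays.
SAMPLE = (
    "Authentication-Results: mx.google.com;\n"
    "       dkim=pass header.i=@example.com header.s=selector1;\n"
    "       spf=pass smtp.mailfrom=me@example.com;\n"
    "       dmarc=pass (p=NONE sp=NONE dis=NONE) header.from=example.com\n"
    "From: me@example.com\n"
    "Subject: test\n"
    "\n"
    "hello\n"
)

if __name__ == "__main__":
    print(auth_results(SAMPLE))
    print(all_passed(SAMPLE))
```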

$1/m* seems pretty cheap to ride on Microsoft’s coattails for e-mail delivery, along with getting one day of inbound mail spooling, plus whatever value Exchange Online Protection provides as an anti-spam gateway. It’s not abusing the service in any way; this is what any cloud-based anti-spam service needs to function. EOP is special because Microsoft o365 services don’t have monthly minimum charges.

Of course, there are providers out there specifically offering inexpensive inbound MX spooling and outgoing SMTP relay services to the frugal self-hoster. Dynu, for example, is $9.99/yr per domain and service.

I might be halfway to talking myself into bringing all of my e-mail home. For my collection of domains and potential user count, EOP is dirt cheap.
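To make that comparison concrete, here is a back-of-the-envelope sketch in Python using the prices quoted above. The user and domain counts are hypothetical, and `services=2` is my reading of "per domain and service" (inbound MX spooling plus outbound SMTP relay). It also ignores the cost of a VPS.

```python
def eop_yearly_cents(users, per_user_month_cents=100):
    """EOP at $1 per user per month, as quoted in the post."""
    return users * per_user_month_cents * 12


def relay_yearly_cents(domains, services=2, per_unit_year_cents=999):
    """A $9.99/yr relay billed per domain and per service.

    services=2 assumes both inbound MX spooling and outbound SMTP relay.
    """
    return domains * services * per_unit_year_cents


def dollars(cents):
    """Format an integer number of cents as a dollar amount."""
    return f"${cents / 100:.2f}"


if __name__ == "__main__":
    users, domains = 3, 5  # hypothetical household
    print("EOP:  ", dollars(eop_yearly_cents(users)))
    print("Relay:", dollars(relay_yearly_cents(domains)))
```

The point is only that EOP scales with users while the relay scales with domains, so which one is cheaper depends on the ratio between the two.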

* Plus the price of a VPS if dealing with port blocking, but we all need a VPS for something anyways, right? And that EOP license is “per user” but Microsoft won’t know how many users you have unless they ask you / audit your o365 licensing, and “Yep, just the X, it’s just for me [and my X-1 family members / friends / employees], and EOP is the only service needed right now” ought to be a perfectly acceptable response.